Understanding how ChatGPT works is like trying to understand how a brilliant but invisible mind operates behind the scenes of a flowing conversation. To most users, ChatGPT appears as an intelligent, well-read assistant that can write poetry, code software, explain quantum mechanics, or even roleplay as a historical character. But underneath that friendly interface lies a meticulously engineered technological marvel, built on decades of research in machine learning, natural language processing (NLP), and artificial intelligence.

At the heart of ChatGPT lies a transformer-based neural network architecture—a system that doesn’t “understand” language the way humans do but is incredibly good at predicting and generating text in a way that feels intelligent. Let’s unravel the layers, one technical concept at a time.

1. ChatGPT Is Built on the Transformer Architecture

The backbone of ChatGPT is the Transformer, a neural network architecture introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al. Older models such as RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks) process words one at a time, carrying information forward step by step. A Transformer instead processes every token in its context in parallel, so any token can be related directly to any other. This makes long-range dependencies easier to capture and lets training scale efficiently on modern hardware.

- Transformers use self-attention: This mechanism lets each token in a sequence “look at” other tokens and weigh how relevant each one is. For example, in the sentence “The cat that the dog chased was black,” self-attention helps the model work out that “cat” is the one that “was black,” not “dog.” In GPT-style models, attention is causal: each token attends only to the tokens that come before it, so the model never sees the tokens it is being trained to predict.

- Positional encoding adds order: Since Transformers process all words in parallel, they don’t inherently know the position of each word. To preserve the sequence of language, positional encodings are added to each word’s vector to indicate its position in the sentence.

- Multi-head attention enables nuanced understanding: The model doesn’t just look at the sentence in one way; it uses multiple attention heads to analyze the sentence from different perspectives simultaneously.
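The three ideas above can be sketched in a few lines of plain Python. This is a minimal illustration rather than how GPT is actually implemented: it uses a single attention head, skips the learned query, key, and value projections, and uses the sinusoidal positional encoding from the 2017 paper (GPT-2 and GPT-3 use learned position embeddings instead). The toy vectors and function names are assumptions made for the example.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def positional_encoding(position, dim):
    """Sinusoidal encoding: sine on even indices, cosine on odd ones."""
    encoding = []
    for i in range(dim):
        pair = i - (i % 2)  # even/odd neighbours share one frequency
        angle = position / (10000 ** (pair / dim))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def self_attention(queries, keys, values, causal=True):
    """Scaled dot-product attention over lists of token vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for i, query in enumerate(queries):
        # With a causal mask, token i may only attend to tokens 0..i.
        visible = list(range(i + 1)) if causal else list(range(len(keys)))
        scores = [sum(q * k for q, k in zip(query, keys[j])) / scale for j in visible]
        weights = softmax(scores)
        # Each output is a weighted average of the visible value vectors.
        output = [
            sum(w * values[j][c] for w, j in zip(weights, visible))
            for c in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy 2-dimensional token vectors (hypothetical values).
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
inputs = [
    [x + p for x, p in zip(vec, positional_encoding(pos, 2))]
    for pos, vec in enumerate(embeddings)
]
outputs, weights = self_attention(inputs, inputs, inputs)
```

Here `weights[i]` holds how much token `i` attends to each token it is allowed to see. Multi-head attention would run several such computations side by side, each with its own projections, and combine the results.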

2. It’s Trained on Massive Amounts of Text Data

Before ChatGPT can talk like a human, it has to read like one—on steroids. GPT (Generative Pre-trained Transformer) models are trained on a vast amount of text from books, websites, articles, forums, code repositories, and more. This helps it learn patterns, grammar, factual knowledge, and even stylistic nuances.

- Pretraining phase: During pretraining, the model is shown massive amounts of unlabeled text and is trained to predict the next word in a sentence. For example, given the sentence “The sun rises in the ___,” the model learns to predict “east.” This is known as causal (or autoregressive) language modeling.

- Billions of parameters: GPT-3 has 175 billion parameters. These are the weights in the neural network that are adjusted during training to improve its predictions. OpenAI has not disclosed GPT-4’s size, though it is widely assumed to be larger, and it shows better coherence and reasoning than GPT-3.

- Tokenization: Before training, text is broken down into “tokens,” which are smaller chunks of words or characters. Tokenization ensures that the model processes language in manageable pieces. For example, “ChatGPT is cool” might be tokenized into [“Chat”, “G”, “PT”, “ is”, “ cool”].
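To make tokenization and next-token prediction concrete, here is a small sketch. It assumes a greedy longest-match tokenizer over a tiny hand-picked vocabulary; real GPT tokenizers use byte-pair encoding with tens of thousands of entries, so the `VOCAB` set and both function names are purely illustrative.

```python
def tokenize(text, vocab):
    """Greedy longest-match tokenizer over a fixed vocabulary (illustrative only)."""
    tokens, i = [], 0
    while i < len(text):
        # Try the longest candidate first; fall back to a single character.
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in vocab or end == i + 1:
                tokens.append(piece)
                i = end
                break
    return tokens

def next_token_pairs(tokens):
    """Pair each context with the token that follows it, as in causal language modeling."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

# Hypothetical vocabulary chosen to reproduce the split described above.
VOCAB = {"Chat", "G", "PT", " is", " cool"}

tokens = tokenize("ChatGPT is cool", VOCAB)
pairs = next_token_pairs(tokens)

for context, target in pairs:
    print(context, "->", repr(target))
```

Each printed line is one training example: the model sees the context and is scored on how much probability it assigns to the target token.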

3. How the Model Generates a Response During Inference

When you type a question into ChatGPT, what happens next is a complex interplay of math and probabilities. This phase is called inference—the model is no longer learning but using what it has already learned to generate answers.

- Prompt as input: The text you type (your prompt) is first tokenized and passed through the model. It is embedded into high-dimensional vector space, where semantic meanings and relationships are encoded mathematically.

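- Prediction using softmax: At each step, the model produces a score for every token in its vocabulary, and the softmax function converts those scores into a probability distribution. The next token is then chosen from this distribution using one of the decoding strategies below, appended to the input, and the process repeats until the response is complete.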

- Decoding strategies:

  - Greedy decoding always picks the single most likely next token. It is deterministic, but the output can be repetitive or bland.

  - Top-k sampling draws the next token at random from only the k most likely candidates.

  - Nucleus sampling (top-p) draws from the smallest set of tokens whose combined probability is at least p (e.g., 90%).

  - The two sampling strategies add diversity and creativity to the model’s outputs.

- Context window limits: GPT models can only “see” a fixed number of tokens at once (e.g., 2,048 tokens for GPT-3 and 128k tokens for GPT-4 Turbo). If a conversation exceeds this limit, the earliest parts are dropped and no longer influence the response.
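The decoding steps described above can be sketched as follows. The scores for the four candidate tokens are made up for illustration, and the helper names are assumptions; a real model would produce scores over its entire vocabulary.

```python
import math
import random

def softmax(logits):
    """Turn a dict of raw scores into a dict of probabilities."""
    peak = max(logits.values())
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def greedy(probs):
    """Always return the single most likely token."""
    return max(probs, key=probs.get)

def top_k_filter(probs, k):
    """Keep the k most likely tokens and renormalize."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = dict(ranked[:k])
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}

def top_p_filter(probs, p):
    """Keep the smallest set of tokens whose total probability is at least p."""
    kept, cumulative = {}, 0.0
    for tok, prob in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {tok: q / total for tok, q in kept.items()}

def sample(probs, rng):
    """Draw one token at random according to its probability."""
    candidates = list(probs)
    return rng.choices(candidates, weights=[probs[t] for t in candidates], k=1)[0]

def truncate_context(tokens, max_tokens):
    """Keep only the most recent tokens that fit in the context window."""
    return tokens[-max_tokens:] if max_tokens > 0 else []

# Hypothetical scores for the blank in "The sun rises in the ___".
logits = {"east": 3.0, "morning": 2.0, "west": 1.0, "sky": 0.0}
probs = softmax(logits)

rng = random.Random(0)
print("greedy:", greedy(probs))
print("top-k :", sample(top_k_filter(probs, k=2), rng))
print("top-p :", sample(top_p_filter(probs, p=0.9), rng))
```

Greedy decoding returns the same token on every run, while the two sampling variants can return different tokens depending on the random draw.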

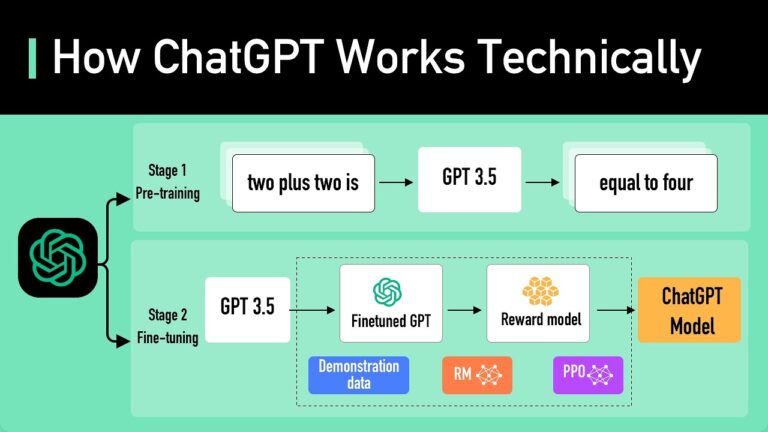

4. Fine-Tuning and Reinforcement Learning Make It Safer

After the base GPT model is pretrained, it undergoes additional processes to make it safer, more helpful, and aligned with human values. This is where ChatGPT is born from the raw GPT.

- Supervised fine-tuning: Human trainers write high-quality examples of question-answer pairs. The model is fine-tuned on this curated data to learn how to respond politely, factually, and helpfully.

- Reinforcement Learning from Human Feedback (RLHF):

  - Trainers rank multiple model outputs for the same prompt from best to worst.

  - These rankings are used to train a reward model that scores how well a response matches human preferences.

  - The supervised fine-tuned model is then further trained using Proximal Policy Optimization (PPO), a reinforcement learning algorithm, to maximize that reward.

  - This results in more aligned and user-friendly responses.

- Safety filters and moderation: OpenAI applies additional safety layers that detect and filter out harmful, biased, or inappropriate content before it’s shown to the user.
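As a rough illustration of how rankings can train a reward model, the sketch below expands one ranking into preferred/rejected pairs and scores each pair with a pairwise comparison loss. This loss is a common choice in published RLHF work, but the text above does not say which loss is used, so treat it as an assumption. The reward values are invented for the example.

```python
import math

def ranking_to_pairs(ranked_outputs):
    """Expand one human ranking (best first) into (preferred, rejected) pairs."""
    return [
        (ranked_outputs[i], ranked_outputs[j])
        for i in range(len(ranked_outputs))
        for j in range(i + 1, len(ranked_outputs))
    ]

def pairwise_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(difference)): small when the preferred reply scores higher."""
    difference = reward_preferred - reward_rejected
    return math.log(1.0 + math.exp(-difference))

# Hypothetical reward-model scores for three replies, ranked best to worst by a trainer.
ranking = ["reply_a", "reply_b", "reply_c"]
rewards = {"reply_a": 1.5, "reply_b": 0.2, "reply_c": -0.7}

pairs = ranking_to_pairs(ranking)
losses = [pairwise_loss(rewards[good], rewards[bad]) for good, bad in pairs]
average_loss = sum(losses) / len(losses)
```

Training the reward model means adjusting it so that `average_loss` falls, which pushes preferred replies toward higher scores than rejected ones. The PPO step then uses those scores as its reward signal.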

5. Multimodal Capabilities in Advanced Versions

While the original GPT models could only handle text, newer versions like GPT-4 are multimodal: they accept images as well as text as input and respond in text. This enables users to upload diagrams, photos, or visual puzzles and receive intelligent responses.

- Image inputs are encoded using vision encoders that transform images into embeddings.

- A shared architecture lets the model reason over text and image embeddings together, rather than handling each modality in isolation.

- Some view this as a step toward Artificial General Intelligence (AGI), where an AI model isn’t just a text generator but a versatile system that can interpret the world holistically.

6. What ChatGPT Doesn’t Do (But You Might Think It Does)

Despite its powerful capabilities, it’s important to understand the limitations of how ChatGPT works.

- No real understanding or consciousness: The model doesn’t “understand” language—it statistically associates words based on patterns in training data.

- No memory (unless explicitly provided): ChatGPT doesn’t remember past conversations unless memory is enabled or passed back through the prompt.

- Not connected to the internet: It doesn’t browse the web in real-time unless connected to tools like a browser (e.g., in ChatGPT Plus with browsing enabled).

- Prone to hallucinations: Sometimes the model can generate plausible-sounding but false or nonsensical information.
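The point about memory is easiest to see in code. Because the model itself keeps no state between requests, an application that wants continuity has to send earlier turns back in with each new message. The sketch below assumes a simple plain-text prompt format; `build_prompt` and its `max_turns` parameter are hypothetical names, not part of any real API.

```python
def build_prompt(history, user_message, max_turns=4):
    """Re-send recent turns so a stateless model can 'see' the conversation."""
    recent = history[-max_turns:] if max_turns > 0 else []
    lines = [f"{role}: {text}" for role, text in recent]
    lines.append(f"User: {user_message}")
    lines.append("Assistant:")
    return "\n".join(lines)

history = [
    ("User", "My name is Ada."),
    ("Assistant", "Nice to meet you, Ada."),
]

with_history = build_prompt(history, "What is my name?")
without_history = build_prompt([], "What is my name?")
print(with_history)
```

Only the first prompt contains the name, so only in that case could the model answer correctly. Limiting the history to recent turns is also one simple way to stay within the context window.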

Conclusion: ChatGPT Is a Mathematical Language Prediction Engine, Not a Thinking Being

In essence, ChatGPT works like a massive, probability-driven word engine that strings together responses based on everything it has learned from reading vast amounts of text. It doesn’t think, feel, or comprehend the world as we do—but it mimics those behaviors extremely well because it has been optimized to predict language in context. Its brilliance is not in true intelligence but in statistical mastery of language. Through billions of parameters, attention heads, and probability calculations, it generates responses that are useful, coherent, and often surprisingly creative.

What makes ChatGPT revolutionary isn’t that it knows everything—but that it turns static, pre-learned knowledge into dynamic, real-time conversations. It’s a mirror of human language—a reflection of everything we’ve ever written, spoken, or shared—and it’s accessible at your fingertips, ready to assist, ideate, or collaborate.

As this technology continues to evolve—incorporating more modalities, longer context windows, and better alignment with human goals—it will become not just a tool for answering questions, but a companion for imagination, innovation, and exploration.
