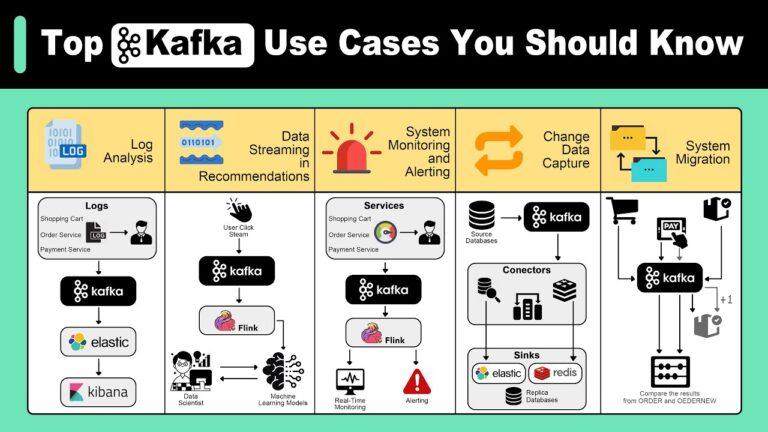

Top Kafka Use Cases You Must Know: A Comprehensive Guide

Apache Kafka is one of the most powerful and widely adopted open-source distributed event-streaming platforms. Originally developed at LinkedIn and later open-sourced through the Apache Software Foundation, Kafka has become indispensable for many modern enterprises because it can ingest, store, and process large volumes of real-time data efficiently. Whether it’s used for real-time data integration, log aggregation, or communication between microservices, Kafka excels at high-throughput, low-latency data pipelines. This article explores the most significant Kafka use cases and shows how businesses use it to improve the performance, scalability, and resilience of their systems.

1. Real-Time Data Streaming and Analytics

Use Case Overview

Real-time data streaming has become a critical component of modern applications that must process data instantly to make data-driven decisions. In industries like finance, e-commerce, and telecommunications, companies need to process, analyze, and react to data within milliseconds to deliver services or detect issues quickly. Kafka’s architecture, built for low-latency streaming and horizontal scalability, provides the backbone for processing and analyzing these streams in real time.

Why Kafka?

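- Low Latency and High Throughput: Kafka appends records to partitioned, sequentially written logs and transfers them in batches, which lets it move very large volumes of data while keeping end-to-end latency low, often in the range of milliseconds.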

- Stream Processing: With Kafka Streams and integrations with tools like Apache Flink or Apache Storm, businesses can process and transform data as it flows through Kafka topics.

- Data Parallelism: Kafka’s partitioning mechanism allows parallel processing of data, enabling higher processing speeds and distributed workloads.
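To make the partitioning idea concrete, here is a minimal Python sketch, assuming a hypothetical `clicks` topic with four partitions. It shows why keyed records keep per-key order while still allowing parallelism: every event with the same key (here a user ID) lands in the same partition, so one consumer sees that user’s events in sequence while other partitions are processed in parallel. The CRC32 hash is a stand-in; Kafka’s default partitioner hashes keys with murmur2, and this code does not talk to a real broker.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 4  # assumed partition count for a hypothetical "clicks" topic


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a record key to a partition.

    Stand-in hash: Kafka's default partitioner uses murmur2, not CRC32,
    but the principle (same key -> same partition) is the same.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def distribute(events):
    """Group (key, value) events by partition, preserving per-key order."""
    partitions = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key)].append((key, value))
    return partitions


events = [
    ("user-1", "click"),
    ("user-2", "click"),
    ("user-1", "add_to_cart"),
    ("user-3", "click"),
    ("user-1", "purchase"),
]

for partition, records in sorted(distribute(events).items()):
    print(partition, records)
```

Because partitions are the unit of parallelism, a consumer group can have at most one active consumer per partition; adding partitions raises that ceiling, but changing the partition count of an existing topic also changes which partition each key maps to.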

Example

An e-commerce company might use Kafka to monitor customer behavior on its website in real time, tracking clicks, add-to-cart actions, and purchases. By processing this data stream as it arrives, the company can adjust product recommendations and personalize offers, boosting conversion rates.

2. Event-Driven Architectures and Microservices

Use Case Overview

With the rise of microservices architectures, managing the interactions between various services is essential. In a microservices ecosystem, each service is designed to be loosely coupled and operates independently. Kafka serves as the event bus that facilitates communication between these services, allowing them to publish and consume events asynchronously.

Why Kafka?

- Asynchronous Communication: Kafka enables microservices to communicate through events without needing synchronous API calls, reducing system dependencies.

- Decoupled Services: Producers do not need to know which services consume their events, so services stay loosely coupled and can evolve independently as long as they agree on the structure (schema) of the events passed between them.

- Scalability: Kafka’s ability to handle millions of messages per second allows it to manage communication in a large-scale, microservices-based architecture.

Example

A ride-hailing company might use Kafka in its microservices architecture. When a user requests a ride, the ride service publishes an event to Kafka. The payment service, notification service, and driver service then consume that event to execute their respective processes, such as charging the user, sending updates, and assigning a driver.
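The fan-out in this example works because each downstream service reads the topic as its own consumer group, with its own offset. The following in-memory Python sketch models a single partition to illustrate that idea; the `Topic` class, the service names, and the `RideRequested` event shape are hypothetical and are not part of any Kafka client API.

```python
from collections import defaultdict


class Topic:
    """Toy append-only log modelling one topic partition (in-memory only)."""

    def __init__(self):
        self.log = []                     # ordered records; never removed on read
        self.offsets = defaultdict(int)   # next offset to read, per consumer group

    def publish(self, event):
        """Append an event and return its offset."""
        self.log.append(event)
        return len(self.log) - 1

    def poll(self, group):
        """Return records this group has not seen yet and advance its offset."""
        start = self.offsets[group]
        batch = self.log[start:]
        self.offsets[group] = len(self.log)
        return batch


rides = Topic()
rides.publish({"type": "RideRequested", "ride_id": 42, "rider": "alice"})

# Each service is its own consumer group, so each one receives every event.
for group in ("payment-service", "notification-service", "driver-service"):
    for event in rides.poll(group):
        print(f"{group} handling {event['type']} for ride {event['ride_id']}")
```

Because records stay in the log after they are read, a service added later can start from the earliest offset and replay past events, which queues that delete messages on acknowledgment do not offer.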

3. Log Aggregation and Centralized Logging

Use Case Overview

In distributed systems, logs are generated from multiple services and servers. To monitor, debug, and audit activities across a system, businesses need a centralized solution to collect and analyze logs. Kafka can act as a central hub to aggregate logs from different sources and pass them to analytics and monitoring systems.

Why Kafka?

- Scalable Logging Pipeline: Kafka can handle logs from thousands of servers and applications, offering a scalable solution for centralized logging.

- Integration with Monitoring Tools: Kafka can be integrated with log analysis tools like Elasticsearch, Logstash, and Kibana (ELK stack), enabling real-time log monitoring and alerting.

- Resilience and Durability: Kafka replicates topic partitions across brokers and retains messages for a configurable period, so logs remain available if a broker fails and consumers can re-read them after an outage.
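Durability also depends on how consumers track their position. The Python sketch below, an in-memory illustration rather than real client code, shows a log shipper that commits its offset only after a record has been handled, so a crash causes records to be re-read rather than skipped. The `LogConsumer` class and the sample log lines are hypothetical; in Kafka itself, committed offsets are stored by the cluster in the internal `__consumer_offsets` topic.

```python
class LogConsumer:
    """Toy consumer that commits its offset only after processing a record."""

    def __init__(self, log, committed=0):
        self.log = log
        self.committed = committed  # next offset to process after a restart

    def run(self, handle, crash_at=None):
        """Process records from the committed offset; optionally simulate a crash."""
        for offset in range(self.committed, len(self.log)):
            if offset == crash_at:
                raise RuntimeError(f"simulated crash at offset {offset}")
            handle(self.log[offset])
            self.committed = offset + 1  # commit only after successful handling


app_logs = ["svc-a INFO start", "svc-b WARN slow query", "svc-a ERROR timeout"]
indexed = []  # stands in for a search index such as Elasticsearch

consumer = LogConsumer(app_logs)
try:
    consumer.run(indexed.append, crash_at=2)
except RuntimeError as err:
    print(err)

# Restart from the committed offset: nothing is lost or skipped.
LogConsumer(app_logs, committed=consumer.committed).run(indexed.append)
print(indexed)
```

The trade-off is at-least-once delivery: a crash between processing a record and committing its offset would process that record again, so downstream sinks should tolerate duplicates, for example through idempotent writes keyed on an event ID.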

Example

A tech company managing a large-scale cloud platform uses Kafka to centralize logs from all of its microservices. These logs are then fed into Elasticsearch for real-time searching and monitoring, allowing the DevOps team to detect and respond to issues faster.

4. Real-Time Fraud Detection

Use Case Overview

Fraud detection systems need to analyze streams of transactions or user behavior in real time to flag potential fraudulent activities. Kafka plays a key role in handling these high-speed data streams, enabling companies to identify anomalies and prevent fraudulent transactions before they are completed.

Why Kafka?

- Real-Time Stream Processing: Combined with a stream processor, Kafka lets companies process massive volumes of transactions in real time and apply machine learning models to detect fraud patterns.

- Low Latency: Kafka’s architecture ensures that there’s minimal delay in transmitting data, which is crucial for time-sensitive fraud detection systems.

- Scalability: Kafka can easily scale to meet the high throughput demands of fraud detection in industries like banking and e-commerce.

Example

A global bank might use Kafka to monitor credit card transactions. Each transaction event is streamed to Kafka, where it is processed by a machine learning model in real time to detect anomalies such as unusual spending patterns, location changes, or high-risk purchases. If suspicious activity is detected, the system can automatically flag or block the transaction.
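The per-card logic in this example is stateful: each decision depends on that card’s history. As a stand-in for the machine learning model described above, the Python sketch below applies two simple rules, an amount far above the card’s running average and a change of country. The thresholds, tuple layout, and function names are assumptions for illustration. In a real deployment, transactions would be keyed by card number so that all events for one card reach the same stream-processing instance and its local state.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class CardState:
    """Per-card history a stream processor would keep in its local state."""
    count: int = 0
    total: float = 0.0
    last_country: Optional[str] = None


AMOUNT_MULTIPLIER = 5.0  # assumed: flag spends above 5x the card's average
MIN_HISTORY = 3          # assumed: transactions needed before trusting the average


def check(state: CardState, amount: float, country: str) -> List[str]:
    """Score one transaction against the card's history, then update that history."""
    reasons = []
    if state.count >= MIN_HISTORY and amount > AMOUNT_MULTIPLIER * state.total / state.count:
        reasons.append("amount far above card average")
    if state.last_country is not None and country != state.last_country:
        reasons.append(f"country changed {state.last_country} -> {country}")
    state.count += 1
    state.total += amount
    state.last_country = country
    return reasons


def process(transactions) -> List[Tuple[str, float, List[str]]]:
    """Run every transaction through check(), keeping separate state per card."""
    states = {}
    flagged = []
    for card, amount, country in transactions:
        reasons = check(states.setdefault(card, CardState()), amount, country)
        if reasons:
            flagged.append((card, amount, reasons))
    return flagged


txns = [
    ("card-1", 40.0, "US"), ("card-1", 55.0, "US"), ("card-1", 35.0, "US"),
    ("card-1", 900.0, "US"),
    ("card-2", 20.0, "DE"), ("card-2", 25.0, "FR"),
]
for card, amount, reasons in process(txns):
    print(card, amount, reasons)
```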

5. Stream Processing for Real-Time Data Pipelines

Use Case Overview

Kafka’s stream processing capabilities allow businesses to transform, aggregate, filter, and enrich data in real time. By utilizing Kafka Streams, Flink, or other processing frameworks, businesses can create sophisticated real-time data pipelines.

Why Kafka?

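- Built-In Stream Processing: The Kafka Streams library lets applications filter, transform, join, and aggregate streams without a separate processing cluster. It runs inside your own application instances rather than on the Kafka brokers, reading from and writing to Kafka topics, which makes it a natural fit for building real-time pipelines.

- Integration with Multiple Data Sources: Kafka can ingest data from many sources, such as databases, sensors, and user applications, which stream processors can then transform in real time.

- Fault Tolerance: Partition replication across brokers, together with automatic rebalancing of work among consumer instances, keeps data pipelines running when a broker or processing node fails.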

Example

A telecommunications company might use Kafka for real-time monitoring of network traffic. Kafka ingests data from network devices and sensors, which is then processed in real time to detect potential outages, optimize bandwidth, or perform load balancing.
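The filter-then-aggregate pattern in this example is usually expressed as a windowed aggregation. The Python sketch below counts error reports per device in one-minute tumbling windows and raises an alert when a device crosses a threshold; the window size, threshold, and record format are assumptions. It processes a finite list for clarity, whereas a stream processor such as Kafka Streams (through its `TimeWindows` windowing) updates counts continuously and must also handle late or out-of-order events, which this sketch ignores.

```python
from collections import defaultdict

WINDOW_MS = 60_000    # assumed: one-minute tumbling windows
ERROR_THRESHOLD = 3   # assumed: alert when a device reports >= 3 errors in a window


def window_start(ts_ms: int) -> int:
    """Align a timestamp to the start of its tumbling window."""
    return ts_ms - ts_ms % WINDOW_MS


def aggregate(readings):
    """Filter to error events, then count them per (device, window)."""
    counts = defaultdict(int)
    for device, ts_ms, status in readings:
        if status == "error":
            counts[(device, window_start(ts_ms))] += 1
    return counts


def alerts(counts):
    """Return the (device, window) pairs that crossed the threshold."""
    return sorted(key for key, n in counts.items() if n >= ERROR_THRESHOLD)


readings = [
    ("router-7", 1_000, "error"), ("router-7", 15_000, "error"),
    ("router-7", 59_000, "error"), ("router-7", 61_000, "error"),
    ("switch-2", 5_000, "ok"), ("switch-2", 30_000, "error"),
]
print(alerts(aggregate(readings)))
```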

6. Data Integration and ETL (Extract, Transform, Load)

Use Case Overview

Kafka is increasingly used as a backbone for ETL processes, where data is ingested from multiple sources, transformed, and then loaded into a data warehouse or lake for further analysis. Kafka Connect provides the framework for integrating Kafka with various databases, message queues, and systems.

Why Kafka?

- Seamless Data Integration: Kafka Connect enables easy integration with various data sources and sinks, allowing for the continuous extraction and loading of data.

- Real-Time Transformations: Kafka Connect’s single message transforms (SMTs) and Kafka Streams let data be transformed in flight as it moves between systems, which can reduce or remove the need for a separate batch transformation step later.

- Scalability and Performance: Kafka’s architecture ensures high scalability and efficiency in handling large-scale ETL jobs across distributed systems.

Example

A retail company with multiple data sources (e.g., sales data, inventory levels, and customer feedback) uses Kafka to collect, transform, and load this data into a central data warehouse in real time. This allows immediate analysis of key business metrics such as sales trends and inventory levels.
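The transform step in such a pipeline often means mapping records from sources with different shapes into one schema before loading. The Python sketch below normalizes two hypothetical sources, a point-of-sale system and a web shop, and accumulates revenue per store and SKU in a dictionary that stands in for a warehouse table. All field names are invented for illustration; in practice the extract and load sides would typically be handled by Kafka Connect source and sink connectors.

```python
from collections import defaultdict


def transform(source: str, record: dict) -> dict:
    """Normalize records from two hypothetical sources into one schema (revenue in cents)."""
    if source == "pos":  # point-of-sale system already reports integer cents
        return {"store": record["store_id"], "sku": record["sku"],
                "revenue_cents": record["amount_cents"]}
    if source == "web":  # web shop reports a decimal total in currency units
        return {"store": "online", "sku": record["product"]["sku"],
                "revenue_cents": round(record["total"] * 100)}
    raise ValueError(f"unknown source: {source}")


def load(table: dict, row: dict) -> None:
    """Accumulate revenue per (store, sku) in a dict standing in for a warehouse table."""
    table[(row["store"], row["sku"])] += row["revenue_cents"]


warehouse = defaultdict(int)
stream = [
    ("pos", {"store_id": "berlin-01", "sku": "A100", "amount_cents": 1999}),
    ("web", {"product": {"sku": "A100"}, "total": 19.99}),
    ("pos", {"store_id": "berlin-01", "sku": "A100", "amount_cents": 1999}),
]
for source, record in stream:
    load(warehouse, transform(source, record))
print(dict(warehouse))
```

Storing money as integer cents avoids accumulating floating-point rounding errors as totals grow.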

7. Real-Time Recommendations and Personalization

Use Case Overview

Personalized recommendations play a vital role in driving user engagement and increasing sales in e-commerce and content platforms. By using Kafka, businesses can process user behavior in real time and generate recommendations that are timely and relevant.

Why Kafka?

- Real-Time Data Processing: Kafka can process large volumes of user behavior data in real time, making it ideal for generating up-to-the-minute personalized recommendations.

- Integration with Machine Learning Models: Kafka can be integrated with machine learning systems that analyze user data and provide insights for dynamic recommendations.

- Scalability: Kafka’s partitioning mechanism enables it to handle millions of events from users browsing products or content simultaneously.

Example

A video streaming platform might use Kafka to analyze a user’s viewing history and interaction data to generate real-time recommendations for what to watch next. As users continue to engage with content, the recommendations are dynamically updated, increasing engagement.
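Incremental updates are what make these recommendations dynamic: each viewing event adjusts the user’s profile immediately instead of waiting for a nightly batch job. The Python sketch below stands in for a real recommendation model with a simple genre-affinity heuristic; the catalog, function names, and scoring rule are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical catalog: title -> genre
CATALOG = {
    "Deep Space": "sci-fi", "Star Harbor": "sci-fi", "Orbit": "sci-fi",
    "Laugh Track": "comedy", "Stand Up": "comedy",
    "Cold Case": "crime",
}

watched = defaultdict(set)           # user -> titles already seen
genre_counts = defaultdict(Counter)  # user -> genre affinity, updated per event


def on_view_event(user: str, title: str) -> None:
    """Update the user's profile as each viewing event arrives."""
    watched[user].add(title)
    genre_counts[user][CATALOG[title]] += 1


def recommend(user: str, k: int = 2) -> list:
    """Suggest unseen titles, starting with the user's most-watched genres."""
    picks = []
    for genre, _ in genre_counts[user].most_common():
        picks += sorted(t for t, g in CATALOG.items()
                        if g == genre and t not in watched[user])
    return picks[:k]


for title in ["Deep Space", "Laugh Track", "Star Harbor"]:
    on_view_event("u1", title)
    print(title, "->", recommend("u1"))
```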

8. IoT Data Collection and Processing

Use Case Overview

The Internet of Things (IoT) generates an enormous amount of data from connected devices such as sensors, cameras, and smart appliances. Kafka is well suited to collecting, processing, and analyzing this data at scale, often in real time.

Why Kafka?

- Massive Scalability: Kafka can handle large volumes of IoT data generated by thousands or even millions of devices.

- Real-Time Data Processing: Kafka’s ability to ingest and process data in real time makes it well suited to IoT use cases where immediate action is needed, such as industrial automation or smart homes.

- Resilience: Once device data reaches the cluster, Kafka’s replication keeps it stored reliably so it can still be processed if individual brokers or consumers fail.

Example

An energy company might use Kafka to collect data from thousands of smart meters installed in homes and businesses. The data is analyzed in real time to optimize energy distribution, detect faults in the grid, and even alert customers to abnormal energy consumption.
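Detecting abnormal consumption per meter requires statistics that can be updated one reading at a time, since a stream never ends. The Python sketch below uses Welford’s online algorithm to keep a running mean and standard deviation for each meter and flags readings that fall far outside that meter’s normal range. The z-score threshold, warm-up length, and meter IDs are assumptions; a production system might use more robust baselines, for example ones that account for daily and seasonal patterns.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online algorithm: mean and variance without storing history."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def stddev(self) -> float:
        """Sample standard deviation (0.0 until there are two readings)."""
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


Z_THRESHOLD = 3.0  # assumed: flag readings more than 3 std devs from the meter's mean
WARMUP = 5         # assumed: readings required before any alert is raised

meters = {}


def on_reading(meter_id: str, kwh: float) -> bool:
    """Return True if this reading looks abnormal for its meter, then learn from it."""
    stats = meters.setdefault(meter_id, RunningStats())
    abnormal = (
        stats.n >= WARMUP
        and stats.stddev > 0
        and abs(kwh - stats.mean) / stats.stddev > Z_THRESHOLD
    )
    stats.update(kwh)
    return abnormal


readings = [1.1, 0.9, 1.0, 1.2, 0.8, 1.0, 4.5]
flags = [on_reading("meter-17", r) for r in readings]
print(flags)
```

This sketch folds every reading, including flagged ones, into the statistics, so a sustained change in usage gradually becomes the new normal; excluding flagged readings is an alternative if one-off spikes should not shift the baseline.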

Conclusion

Kafka’s ability to handle real-time data streams, combined with its scalability and fault tolerance, makes it valuable across many industries. From event-driven microservices and centralized logging to fraud detection, ETL, personalization, and IoT data processing, the same core design applies: durable, partitioned logs that many producers can write to and many consumers can read independently, balancing high throughput with low latency while providing the resilience distributed systems need.

As demand for real-time data pipelines grows, understanding where Kafka fits across these use cases helps organizations in finance, e-commerce, telecommunications, and IoT turn raw event streams into timely decisions. Apache Kafka is more than a message broker: it is a foundational platform for any organization that wants to build on real-time data, whether it is just beginning with Kafka or refining an existing implementation.
